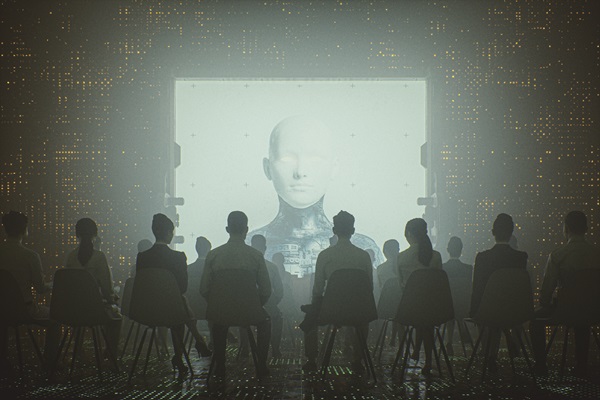

The Future of Leadership Need Not Be Algorithmic

Human-AI collaboration is not a technological milestone. It is a societal test for which our current models of leadership are not ready.

Dr. Srinath Sridharan is a Corporate Advisor & Independent Director on Corporate Boards. He is the author of ‘Family and Dhanda’.

July 1, 2025 at 1:16 AM IST

The central question of our era is not whether machines will outpace humans. It is whether the frameworks through which we lead, govern, and organise are fit for the world we are building. The debate around artificial intelligence and leadership often distracts us with surface anxieties—algorithmic bias, automation risk, emotional intelligence add-ons—while ignoring a more fundamental issue: today’s leadership structures are unprepared for both technological partnership and human complexity.

We are not merely confronting the challenge of coexisting with machines. We are confronting the reality that our leadership models were already strained—transactional, extractive, and increasingly dislocated from meaning. The rise of AI has not so much disrupted these models as it has illuminated their inadequacy.

For too long, leadership, particularly in capitalist economies, has been framed as performance without proximity. Authority has decoupled from responsibility. Influence has been measured in scale, not substance. Burnout, precarity, and cultural erasure are not unfortunate side-effects of progress; they are symptoms of a system optimised for efficiency rather than ethics. The algorithm did not create this dynamic. It merely systematised it.

This tension is especially visible in India, where the lived ethos of work has historically been rooted in relational value. In our civilisational traditions, the role of the teacher, the artisan, the storyteller carried intrinsic dignity, not just economic utility. These roles were embedded in context, community, and continuity. Today, however, we are rapidly replacing that depth with digital surrogates. A folk performer adapting their craft to fit social media formats is not simply innovating; they are negotiating relevance within a system that does not recognise their original idiom.

When we celebrate the “creator economy” without interrogating its design, we obscure the precarity built into it. Platforms reward visibility, not veracity. Algorithms recalibrate incentives weekly, leaving no room for reflection, depth, or repair. Leadership, under such a model, becomes an exercise in managing volatility rather than nurturing ecosystems.

In policy circles and corporate boardrooms, there is now talk of bringing “psychological safety” to digital labour. But safety is not achieved through language alone. It requires reconfiguring the structural foundations of value creation. It is not safety if one’s rent still depends on a viral post. It is not autonomy if one’s authenticity must be contorted to algorithmic preference. Leadership that measures engagement while ignoring erosion—of trust, of identity, of wellbeing—cannot claim to be adaptive.

Nor can it be called “visionary” to import leadership models from global tech companies and impose them onto Indian socio-economic realities. Netflix is often cited as a case study in agility. But its success is architectural. It scales content through data-driven design. It is not an institution negotiating cultural memory, linguistic nuance, or intergenerational legitimacy. When a Kathakali artist reorients their storytelling to fit streaming platforms, what we witness is not cultural ascension. It is context collapse.

India’s opportunity is not to replicate Silicon Valley playbooks. It is to lead through a different grammar—one that acknowledges informal social contracts, values moral capital, and is anchored in pluralistic sensibilities. The idea of leadership here has historically been interdependent, not individualistic. If we allow that inheritance to be overwritten by transactional metrics, we do not become global. We become rootless.

We must also contend with a subtler erosion: the vanishing appreciation of what cannot be quantified. India’s informal economic and social networks—between teacher and student, buyer and artisan, neighbour and worker—have long carried the emotional and trust capital that sustains resilience. These were not inefficiencies to be fixed; they were buffers against brittleness. As we replace these with dashboards and predictive analytics, we risk losing the intangible surplus that has quietly stabilised us for decades.

This is why the presumed binary between technology and humanity is not only false, it is dangerous. It suggests that the two are fundamentally incompatible, when the truth is that our imagination, not the machine, is the limiting factor. There is nothing inherently inhuman about AI. But if our systems lack empathy, our technologies will lack care. If our leaders are distant, our data systems will be indifferent. AI does not invent our values. It scales them.

Calls for human-AI collaboration often frame it as a technical challenge. But the real test is cultural. We cannot partner meaningfully with machines if we have not yet learned to partner meaningfully with one another. Our current organisational cultures often fail at even basic cooperation, across hierarchies, disciplines, geographies. To expect more enlightened collaboration with AI in such a context is to confuse access with readiness.

We must stop romanticising AI as an impartial oracle or neutral co-pilot. It is neither. It is a mirror. It reflects and magnifies the assumptions we feed it. If our leadership models are built on speed, AI will accelerate haste. If our systems reward compliance over curiosity, AI will institutionalise mediocrity. And if our organisations prioritise reach over meaning, AI will help us scale irrelevance at unprecedented velocity.

The true task, then, is not to train AI to be more human. It is to train ourselves to lead with greater humanity. That requires more than updated skill sets. It requires moral clarity. It requires leadership that understands influence not as domination but as stewardship. That sees people not as capital to be extracted but as citizens to be respected. That recognises the future not just as a marketplace, but as a shared inheritance.

Until we recalibrate our metrics to reflect that vision, we will continue to measure the wrong things. We will build educational tools that gamify trivia, not cultivate wisdom. Media products that optimise outrage, not truth. Business models that chase scale but shed soul. What we choose to measure decides what we multiply, and we are currently multiplying shallowness.

This erosion of depth is not just cultural. It is economic. A workforce perpetually anxious, self-curating, and exhausted cannot sustain innovation, loyalty, or institutional trust. The cost of burnout will not be paid only in therapy sessions; it will show up in declining productivity, disengagement, and the erosion of public faith in leadership itself.

Reinvention will not come from apps or automation. It will come from courage. The courage to ask: Why do we treat emotional depletion as an operational issue rather than a systemic failure? Why is creative health seen as discretionary rather than foundational? Why do we expect dignity to emerge from structures designed for disposability?

India does not lack talent, tradition, or technology. What we lack is a leadership paradigm that sees interdependence as strength, not inefficiency. That treats the sacredness of work as more than a slogan. That understands AI not as a saviour, but as a signal. A signal that the systems we have built are due for profound introspection.

The algorithm will not judge us. But it will reflect us. And in that reflection, we may find not our future, but our unfinished responsibilities.
